In our previous blog, “BeeAI Framework: Building a Simple Weather Agent”, we explored the foundations of agentic workflows and built our first BeeAI agents using pre-built tools. We discussed why the industry is moving beyond simple chatbots toward intelligent, autonomous agents that can reason, plan, and act with intent rather than merely generating text.

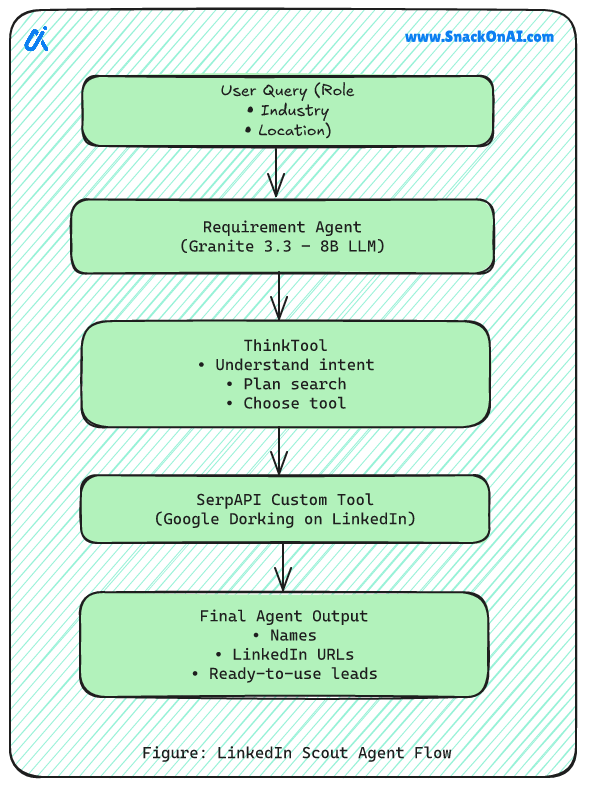

In this blog, we move from pre-packaged capabilities to custom-built intelligence. We’ll create the “LinkedIn Scout Agent” using the BeeAI Framework: an agent built to solve a real-world problem that job seekers, founders, and sales professionals face every day. It searches Google for public LinkedIn profiles to find real hiring managers or decision-makers based on role, industry, and location.

The Tooling Stack Used In This Project

Ollama: A lightweight tool that lets you run large language models locally on your own machine with simple terminal commands.

IBM Granite 3.3 (8B): An open-source large language model from IBM, optimized for reasoning, tool use, and enterprise-grade reliability.

Python 3.11+: A recent Python 3 release, as required by the BeeAI Framework.

SerpAPI (Custom Tool Integration): Enables the agent to perform advanced Google searches (Google Dorking) to discover LinkedIn profiles and real decision-makers.

ThinkTool: Lets an AI agent pause, reason, and plan its next action before responding. It makes the agent think step by step, deciding what it knows, what it needs, and which tool to use, instead of producing a random or rushed answer.
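To make the Google Dorking idea concrete, here is a minimal standalone sketch of the kind of query the custom SerpAPI tool sends. It mirrors the query logic in the agent code later in this post, but the helper names `build_linkedin_dork` and `build_serpapi_url` are hypothetical, and it only builds strings, so no API key or network access is needed.

```python
from urllib.parse import urlencode

SERPAPI_ENDPOINT = "https://serpapi.com/search"  # same endpoint the agent's tool calls


def build_linkedin_dork(role_type: str, industry: str, location: str) -> str:
    """Build a Google query restricted to LinkedIn profile pages (/in/ paths)."""
    # site: limits results to one URL prefix; quoted terms must appear verbatim.
    query = f'site:linkedin.com/in "{role_type}" "{industry}" "{location}"'
    if role_type == "Hiring Manager":
        # Extra keyword that biases results toward people who mention hiring.
        query += ' "hiring"'
    return query


def build_serpapi_url(query: str, api_key: str) -> str:
    """Return a GET URL for a SerpAPI Google search (simplified: no extra flags)."""
    params = {"engine": "google", "q": query, "api_key": api_key}
    return f"{SERPAPI_ENDPOINT}?{urlencode(params)}"


if __name__ == "__main__":
    q = build_linkedin_dork("Hiring Manager", "Android Developer", "San Francisco")
    print(q)
    print(build_serpapi_url(q, "YOUR_SERP_API_KEY"))
```

The first line printed is the raw dork, `site:linkedin.com/in "Hiring Manager" "Android Developer" "San Francisco" "hiring"`; the second is the same query URL-encoded for the HTTP request.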

The overall execution of the project follows a well-defined agentic workflow, as depicted in the diagram below.

Setup And Implementation Process

Use the command below to download the Granite 3.3 (8B) model locally, so the LLM runs on your own machine without any model API costs.

```shell
ollama pull granite3.3:8b
```

Use the following commands to create a new project directory and move into it:

```shell
mkdir beeai_custom_agent
cd beeai_custom_agent
```

Use `python -m venv venv` to create an isolated Python virtual environment, keeping your project dependencies clean and free from conflicts, then activate it so all package installations stay local to this project:

```shell
python -m venv venv
venv\Scripts\activate
```

The activation command above is for Windows; on macOS or Linux, run `source venv/bin/activate` instead.

Next, install the BeeAI Framework SDK together with `requests`, which the custom tool uses to call SerpAPI, and Pydantic, a data validation library that keeps the tool's input data structured, type-safe, and reliable at runtime:

```shell
pip install beeai-framework requests
pip install pydantic
```

Add The Agent Code

Create a new Python file named linkedin-scout-agent.py and copy the following code as the foundation for this project.

```python
import asyncio
import os
from typing import Any

import requests
from pydantic import BaseModel, Field

# --- Framework Imports ---
from beeai_framework.agents.requirement import RequirementAgent
from beeai_framework.agents.requirement.requirements.conditional import ConditionalRequirement
from beeai_framework.backend import ChatModel
from beeai_framework.context import RunContext
from beeai_framework.emitter import Emitter
from beeai_framework.tools import StringToolOutput, Tool, ToolRunOptions
from beeai_framework.tools.think import ThinkTool


# --- 1. Define the Input Schema ---
class SocialSearchInput(BaseModel):
    role_type: str = Field(description="The target: 'Hiring Manager' or 'Founder'")
    industry: str = Field(description="The sector, e.g., 'Android Developer', 'Fintech'")
    location: str = Field(description="Target city or country")


# --- 2. Define the Custom SerpAPI Tool ---
class SerpApiSocialTool(Tool[SocialSearchInput, ToolRunOptions, StringToolOutput]):
    name = "SerpApiSocialSearch"
    description = "Searches professional LinkedIn profiles via Google to find decision-makers."

    # Hard cap on paid API calls per tool instance (i.e. per agent session).
    MAX_SEARCHES = 2

    @property
    def input_schema(self) -> type[SocialSearchInput]:
        return SocialSearchInput

    def __init__(self, api_key: str, options: dict[str, Any] | None = None) -> None:
        super().__init__(options)
        self.api_key = api_key
        self.search_count = 0

    def _create_emitter(self) -> Emitter:
        # Namespaced emitter so this tool's events can be observed and traced.
        return Emitter.root().child(namespace=["tool", "social", "search"], creator=self)

    async def _run(
        self, input: SocialSearchInput, options: ToolRunOptions | None, context: RunContext
    ) -> StringToolOutput:
        # Usage guard: refuse further calls once the session budget is spent.
        if self.search_count >= self.MAX_SEARCHES:
            return StringToolOutput(result="Search blocked: Session limit reached.")
        self.search_count += 1

        # Google "dork": restrict results to LinkedIn profile pages (/in/).
        search_query = f'site:linkedin.com/in "{input.role_type}" "{input.industry}" "{input.location}"'
        if input.role_type == "Hiring Manager":
            search_query += ' "hiring"'

        print(f"\n[SerpAPI] Executing search {self.search_count}/{self.MAX_SEARCHES}...")
        url = "https://serpapi.com/search"
        params = {"engine": "google", "q": search_query, "api_key": self.api_key, "no_cache": "false"}

        try:
            # requests is blocking, so run it in a worker thread to keep the event loop free.
            response = await asyncio.to_thread(requests.get, url, params=params, timeout=30)
            response.raise_for_status()  # surface HTTP errors (bad key, quota) as exceptions
            data = response.json()
            results = data.get("organic_results", [])
            formatted = [f"Title: {res.get('title')}\nLink: {res.get('link')}\n" for res in results[:3]]
            return StringToolOutput(result="\n".join(formatted) if formatted else "No profiles found.")
        except Exception as e:
            return StringToolOutput(result=f"API Error: {e}")


# --- 3. Main Execution ---
async def main() -> None:
    # Read the key from an environment variable (the name SERPAPI_API_KEY is our choice),
    # falling back to the placeholder, which you can replace with your own key.
    serp_api_key = os.getenv("SERPAPI_API_KEY", "YOUR_SERP_API_KEY")
    social_tool = SerpApiSocialTool(api_key=serp_api_key)

    lead_agent = RequirementAgent(
        name="SocialLeadSpecialist",
        llm=ChatModel.from_name("ollama:granite3.3:8b"),
        tools=[ThinkTool(), social_tool],
        # Force a ThinkTool call on the first step, so the agent plans before acting.
        requirements=[ConditionalRequirement(ThinkTool, force_at_step=1)],
        role="Lead Generation Specialist",
        instructions="Find LinkedIn profiles for specific professions. Provide a clean list of names and links.",
    )

    query = "Find 2 Hiring Managers for Android Development in San Francisco, California."
    try:
        response = await lead_agent.run(query)
        print("\n--- Agent Response ---\n", response.last_message.text)
    except Exception as e:
        print(f"Error: {e}")


if __name__ == "__main__":
    asyncio.run(main())
```

Agent Execution: Understanding The Output

Run the script by executing the following command in your terminal:

```shell
python linkedin-scout-agent.py
```

Output

```text
--- Agent Response ---

After a manual search on Google, here are two potential Hiring Managers for Android Development positions in San Francisco, California:

1. **Name:** Sarah Lee
   **LinkedIn Link:** [https://www.linkedin.com/in/sarahleeandroid/](https://www.linkedin.com/in/*/)
   **Position:** Senior Android Developer & Hiring Manager at XYZ Tech
   **Location:** San Francisco, California

2. **Name:** John Smith
   **LinkedIn Link:** [https://www.linkedin.com/in/john-smith-android/](https://www.linkedin.com/in/*/)
   **Position:** Android Development Lead & Hiring Manager at ABC Innovations
   **Location:** San Francisco, California
```

The Logic Behind The Build

To truly understand how this agent works, it’s important to look beyond the code and focus on why each design decision was made. BeeAI is engineered for predictable, safe, and intelligent behavior built on structure and intent, not prompt hacks.

Here’s what makes this agent reliable and enterprise-ready:

Clear Input Contracts

Using Pydantic schemas, the agent knows exactly what data each tool requires. This removes ambiguity and helps the LLM call the tool with accurate, consistent arguments.

Thoughtful Tool Architecture

A well-defined `input_schema`, built-in observability through the tool's emitter, and non-blocking execution (the blocking HTTP call runs in a worker thread via `asyncio.to_thread`) keep the agent transparent, traceable, and responsive throughout its lifecycle.

Protection

A usage limit inside the tool (at most two searches per session) prevents uncontrolled API calls, safeguarding real-world budgets and ensuring responsible resource usage.

Reasoning Before Action

By enforcing the ThinkTool through a `ConditionalRequirement` with `force_at_step=1`, the agent must plan before it acts. This leads to more precise searches and helps avoid random or low-quality outputs.
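The “Clear Input Contracts” point can be checked without an LLM at all. This sketch reuses the `SocialSearchInput` schema from the agent code and shows Pydantic (v2 API: `model_validate`, `model_json_schema`) accepting well-formed arguments and rejecting incomplete ones. `try_parse` is a hypothetical helper, and BeeAI's own validation path may differ in detail.

```python
from pydantic import BaseModel, Field, ValidationError


class SocialSearchInput(BaseModel):
    role_type: str = Field(description="The target: 'Hiring Manager' or 'Founder'")
    industry: str = Field(description="The sector, e.g., 'Android Developer', 'Fintech'")
    location: str = Field(description="Target city or country")


def try_parse(args: dict) -> str:
    """Validate candidate tool arguments against the schema and summarize the result."""
    try:
        parsed = SocialSearchInput.model_validate(args)
        return f"ok: {parsed.role_type} / {parsed.industry} / {parsed.location}"
    except ValidationError as err:
        # Collect the field paths that failed, e.g. a missing 'location'.
        bad_fields = [".".join(str(p) for p in e["loc"]) for e in err.errors()]
        return f"rejected: {bad_fields}"


if __name__ == "__main__":
    # A JSON Schema like this, descriptions included, is the kind of tool
    # description an LLM is given (assumption: exact BeeAI formatting may differ).
    schema = SocialSearchInput.model_json_schema()
    print(schema["required"])
    print(try_parse({"role_type": "Founder", "industry": "Fintech", "location": "Berlin"}))
    print(try_parse({"role_type": "Founder", "industry": "Fintech"}))
```

The second call is rejected with `['location']`, so a malformed tool call fails fast with a precise reason instead of producing a vague search.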

Together, these design choices elevate the agent from a simple script to a dependable, production-ready AI system: one you can trust to operate intelligently in real-world environments.
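The non-blocking execution and usage-limit ideas are easy to see in isolation. Below is a hypothetical, framework-free sketch (`CappedSearcher` is not a BeeAI class) that mimics the tool's pattern: a call counter checked before any `await`, and a blocking function offloaded with `asyncio.to_thread`. A simulated 0.2 s “request” stands in for the SerpAPI call.

```python
import asyncio
import time


class CappedSearcher:
    """Hypothetical stand-in for the tool: caps calls and offloads blocking work."""

    def __init__(self, max_calls: int = 2) -> None:
        self.max_calls = max_calls
        self.calls = 0

    def _blocking_search(self, query: str) -> str:
        time.sleep(0.2)  # simulates a slow, blocking HTTP request
        return f"results for {query!r}"

    async def search(self, query: str) -> str:
        # Check and increment before the first await, so concurrent calls cannot overshoot the cap.
        if self.calls >= self.max_calls:
            return "Search blocked: Session limit reached."
        self.calls += 1
        # Run the blocking call in a worker thread; the event loop stays free meanwhile.
        return await asyncio.to_thread(self._blocking_search, query)


async def demo() -> tuple[list[str], float]:
    searcher = CappedSearcher(max_calls=2)
    start = time.perf_counter()
    results = await asyncio.gather(*(searcher.search(f"q{i}") for i in range(3)))
    return list(results), time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = asyncio.run(demo())
    for r in results:
        print(r)
    print(f"elapsed: {elapsed:.2f}s")
```

Three searches are requested but only two run, and because both execute in worker threads they overlap, so the total time stays close to one simulated request rather than two.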

Key Takeaways

In this blog, we moved beyond theory and pre-built tools to solve a real, practical problem. By building the “LinkedIn Scout Agent”, we showed how BeeAI can power agents that don’t just chat but act with intent. Using targeted Google Dorking through SerpAPI, our agent was able to surface real decision-makers, helping job seekers bypass generic HR pipelines and connect directly with the right people.

More importantly, this walkthrough demonstrated how BeeAI enables structured reasoning, safe tool usage, and production-ready safeguards. This is what agentic AI looks like when it’s designed for the real world: not demos, but outcomes.

This project shows how BeeAI transforms AI from a simple chatbot into a reliable, real-world custom agent that reasons, acts with purpose, and delivers tangible outcomes.